Citation-Aware RAG: How to Add Fine-Grained Citations in Retrieval and Response Synthesis

TL;DR

This blog explores how to build citation-aware RAG pipelines. We’ll look at how document preprocessing, chunking, and embedding can be extended to capture spatial anchors, and how those anchors flow through retrieval and synthesis so the LLM can surface citations inline with its answers.

Citations are table stakes for agentic applications. Without them, users can’t trust the output. That’s why products like Perplexity and Google AI Search always return sources alongside answers. Even dev tools like Cursor cite line numbers and file names when suggesting code changes.

If you're already building RAG applications with custom pipelines or agentic frameworks like LangChain, adding citations doesn't require rebuilding your system. The techniques below work as an enhancement layer on top of your existing retrieval setup.

At its core, generating citations in RAG is about preserving source information at index time. While for simple use cases the citation is a hyperlink or file reference, for long documents with hundreds of pages or thousands of tokens, just a file reference isn’t enough.

Precise citations, like linking claims to exact paragraphs, table cells, and figures, separate professional agentic applications from chatbot demos. Users don't just get answers; they get evidence.

How to Build Citation-Ready Document Chunks

Most RAG tutorials stop at converting documents into Markdown, chunking, and indexing. This works fine for demos but fails in production. The moment you need to verify sources, audit responses, or meet compliance requirements, basic chunking leaves you empty-handed. To support citation-aware RAG, every chunk needs to carry:

- File name

- Page number

- Spatial metadata (bounding boxes of each line, figure, or table)

The challenge is balancing fidelity with noise. If you add all spatial metadata directly into the chunk text, the content becomes polluted. But if you merge multiple lines into a larger chunk without citation anchors, you lose the ability to cite precise lines.

The solution: insert lightweight citation anchors into the text and store fragment-specific spatial metadata separately as chunk metadata. This keeps the text clean while preserving fine-grained citation targets.

Prerequisites: Document Parsing with Bounding Boxes and Vector Storage

Before building citation-aware retrieval, two capabilities are essential:

- OCR with Spatial Metadata

Converting PDFs or images to plain Markdown with models like Gemini Pro or OpenAI’s vision models makes fine-grained citations impossible. While these VLMs excel at text extraction, they don't return the grounding information needed for citations: bounding boxes, element coordinates, or spatial relationships between document components.

Bounding box coordinates for each document element are required to link responses back to source locations. Text, tables, and images extracted together with their bounding boxes provide the spatial information that anchors each citation to its exact location in the source.

- Metadata-Aware Storage

Vector databases like Pinecone, Qdrant, Weaviate, and PgVector support storing bounding boxes, page numbers, or paragraph IDs (any relevant and contextual metadata) alongside chunks, making them available during retrieval and accessible for your RAG application's end user.

Most existing RAG setups already store some metadata (file names, chunk IDs). Citation support extends this pattern by adding bounding box coordinates, typically adding ~10-15% to your storage overhead but enabling full source traceability.
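To make the "metadata travels with the vector" idea concrete, here is a minimal in-memory sketch. It is not the API of Pinecone, Qdrant, Weaviate, or PgVector: `InMemoryStore`, `add`, and `search` are hypothetical names, the two-dimensional vectors stand in for real embeddings, and `paper.pdf` is a placeholder file name.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Record:
    vector: list[float]
    text: str
    metadata: dict  # file name, page number, citation bounding boxes, ...


@dataclass
class InMemoryStore:
    """Toy stand-in for a vector database (hypothetical, for illustration)."""
    records: list[Record] = field(default_factory=list)

    def add(self, vector, text, metadata):
        self.records.append(Record(list(vector), text, metadata))

    def search(self, query, top_k=1):
        # Rank by cosine similarity; each hit keeps its metadata attached.
        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
            return dot / norm if norm else 0.0

        ranked = sorted(self.records, key=lambda r: cosine(query, r.vector), reverse=True)
        return ranked[:top_k]


store = InMemoryStore()
store.add(
    [0.9, 0.1],
    "SMOTE creates artificial minority samples <c>2.1</c>",
    {
        "file": "paper.pdf",
        "citations": {"2.1": {"page": 2, "bbox": {"x1": 12, "y1": 15, "x2": 149, "y2": 328}}},
    },
)
store.add([0.1, 0.9], "Unrelated chunk", {"file": "other.pdf", "citations": {}})

hit = store.search([1.0, 0.0])[0]
print(hit.metadata["file"], hit.metadata["citations"]["2.1"]["page"])
```

Whatever store you use, the point is the same: the bounding boxes come back with the retrieved chunk, so no second lookup is needed at answer time.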

Document Parsing: From Layout to Citable Chunks

Tensorlake's Document AI makes building citation-aware retrieval easy. The Document AI API parses a page into elements with:

- Content

- Page number

- Bounding boxes

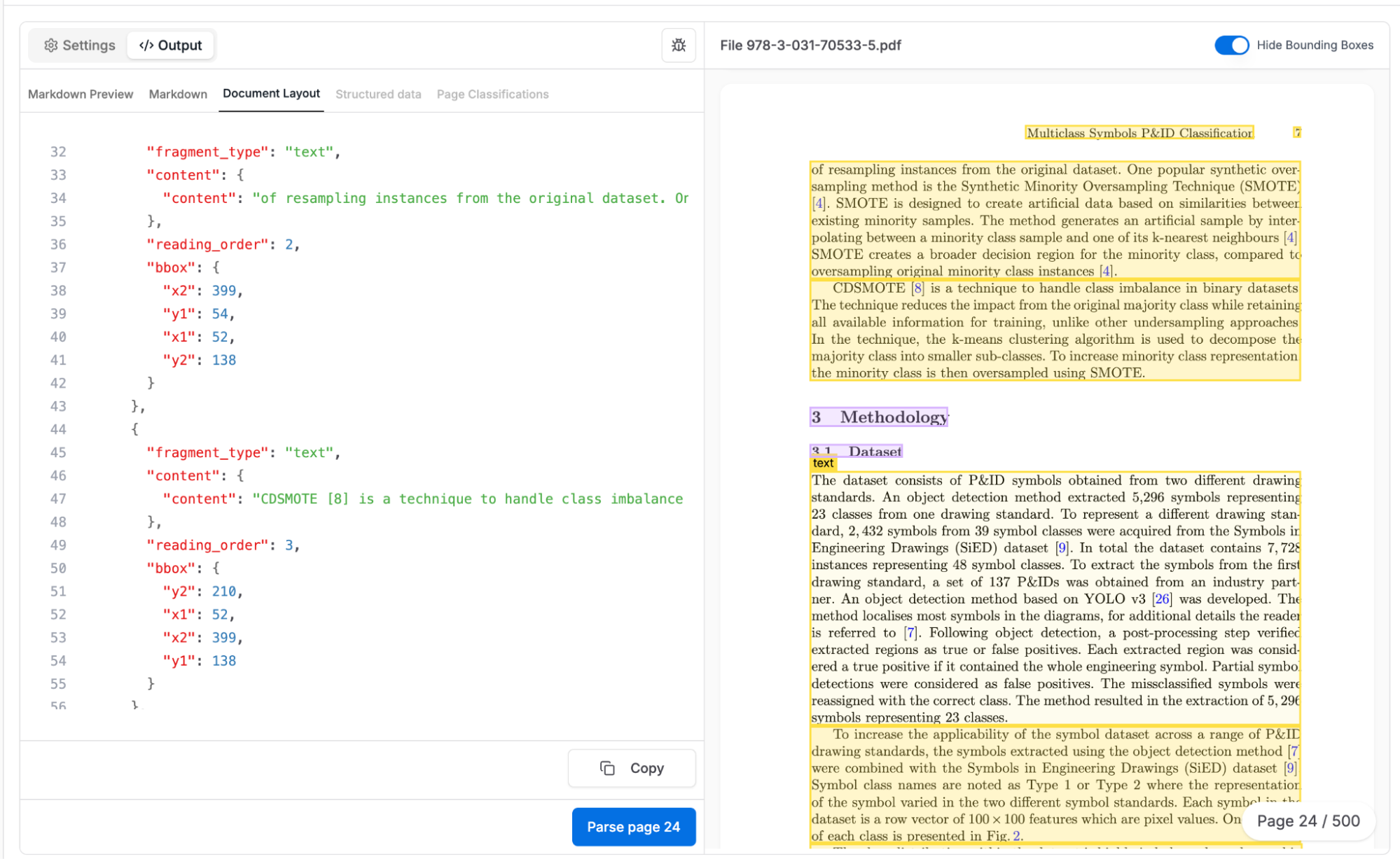

Let's look at an example. Here we have used Tensorlake to parse this research paper:

- On the left is the complete layout representation of the document, which includes the content and bounding box for each fragment.

- On the right, you can see the bounding boxes overlaid.

The document layout returned from the parse endpoint contains all the information you need to create context-aware RAG:

- Pages: The document layout is a list of pages. Each page contains a page number, dimensions, an optional page classification reason, and a list of page fragments. Each fragment can reference the page number and dimensions of the page object it belongs to.

- Page Fragments: Each page fragment contains a fragment type (e.g. section_header, table, text), content, bounding box coordinates, and reading order. The fragment type can help contextualize fragments when chunking.
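The layout described above can be modeled with a few small types. This is only a sketch: the field names (`page_number`, `fragment_type`, `reading_order`, and so on) are assumptions chosen to mirror the description, not the exact schema returned by the parse endpoint, and the sample values are invented.

```python
from dataclasses import dataclass, field


@dataclass
class BBox:
    x1: float
    y1: float
    x2: float
    y2: float


@dataclass
class PageFragment:
    fragment_type: str  # e.g. "section_header", "table", "text"
    content: str
    bbox: BBox
    reading_order: int


@dataclass
class Page:
    page_number: int
    width: float
    height: float
    fragments: list[PageFragment] = field(default_factory=list)

    def in_reading_order(self):
        """Fragments sorted the way a reader would encounter them."""
        return sorted(self.fragments, key=lambda f: f.reading_order)


# Invented sample page; fragments are deliberately listed out of order.
page = Page(
    page_number=2,
    width=612,
    height=792,
    fragments=[
        PageFragment("text", "SMOTE is designed to ...", BBox(12, 35, 360, 400), 2),
        PageFragment("section_header", "Sample header", BBox(12, 15, 149, 30), 1),
    ],
)
ordered_types = [f.fragment_type for f in page.in_reading_order()]
print(ordered_types)
```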

When you're ready to create chunks, you can iterate through page fragment objects and create appropriately sized chunks by combining them. As you create the chunks, you can create contextualized metadata to help during retrieval.

For example, with research papers, you might want your chunks to represent sections within the paper. So while you iterate through the page fragments, you would create a metadata object for each section that contains:

Section metadata:

```python
{
    "title": title,
    "start_page": page_num,
    "end_page": page_num,
    "header_bbox": header_bbox or {}
}
```

Then, when you create the chunks, you could include the anchors in the actual text and the spatial information in the metadata. You can leverage the contextual information by injecting inline anchors.
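The section metadata could be accumulated while walking the fragments. The sketch below assumes each page is a plain dict with `page_number` and `fragments` keys, and each fragment has `fragment_type`, `content`, `bbox`, and `reading_order`. Those key names and the sample headers are illustrative, and `group_into_sections` is a hypothetical helper.

```python
def group_into_sections(pages):
    """Split fragments into sections, starting a new one at each section_header.

    Text that appears before the first header is skipped in this sketch.
    """
    sections = []
    current = None
    for page in pages:
        page_num = page["page_number"]
        for frag in sorted(page["fragments"], key=lambda f: f["reading_order"]):
            if frag["fragment_type"] == "section_header":
                current = {
                    "title": frag["content"],
                    "start_page": page_num,
                    "end_page": page_num,
                    "header_bbox": frag.get("bbox") or {},
                    "fragments": [],
                }
                sections.append(current)
            elif current is not None:
                current["end_page"] = page_num  # a section may spill onto later pages
                current["fragments"].append({**frag, "page": page_num})
    return sections


box = {"x1": 0, "y1": 0, "x2": 10, "y2": 10}
pages = [
    {"page_number": 1, "fragments": [
        {"fragment_type": "section_header", "content": "Introduction", "bbox": box, "reading_order": 1},
        {"fragment_type": "text", "content": "First paragraph.", "bbox": box, "reading_order": 2},
    ]},
    {"page_number": 2, "fragments": [
        {"fragment_type": "text", "content": "Continues here.", "bbox": box, "reading_order": 1},
        {"fragment_type": "section_header", "content": "Methods", "bbox": box, "reading_order": 2},
    ]},
]
sections = group_into_sections(pages)
print([(s["title"], s["start_page"], s["end_page"]) for s in sections])
```

Each fragment keeps its own page number, so a section that spans pages can still produce per-fragment anchors later.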

For example, you could inject citation anchors <c>2.1</c> or <c>2.2</c> which represent <c>[page_num].[reading_order]</c>. These anchors can then be used to reference the spatial information stored in the metadata associated with the embedding.

So, for example, the paragraphs shown in the Tensorlake UI above would get the added anchors:

Chunk text:

```text
of resampling instances from the original dataset. One popular synthetic
oversampling method is the Synthetic Minority Oversampling Technique (SMOTE) [4].
SMOTE is designed to create artificial data based on similarities between
existing minority samples. The method generates an artificial sample by
interpolating between a minority class sample and one of its k-nearest neighbours [4].
SMOTE creates a broader decision region for the minority class, compared to
oversampling original minority class instances <c>2.1</c> CDSMOTE [8] is a
technique to handle class imbalance in binary datasets. The technique reduces
the impact from the original majority class while retaining all available
information for training, unlike other undersampling approaches. In the
technique, the k-means clustering algorithm is used to decompose the majority
class into smaller sub-classes. To increase minority class representation, the
minority class is then oversampled using SMOTE. <c>2.2</c>
```

The chunk would then carry the following metadata:

Chunk metadata:

```json
{
  "citations": {
    "2.1": { "page": 2, "bbox": { "x1": 12, "y1": 15, "x2": 149, "y2": 328 } },
    "2.2": { "page": 2, "bbox": { "x1": 12, "y1": 35, "x2": 360, "y2": 400 } }
  }
}
```

This approach adds minimal overhead to the chunk text while still letting the retriever and LLM map answers back to exact locations in the source. It’s all you need in the preprocessing stage to enable citation-aware RAG.
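The anchor scheme above can be sketched in a few lines. The function below is a hypothetical helper: it assumes each fragment is a dict with `page`, `reading_order`, `content`, and `bbox` keys, and the sample sentences are shortened paraphrases rather than the paper's text.

```python
def build_chunk(fragments):
    """Join fragment texts, appending a <c>page.order</c> anchor after each one.

    Returns the chunk text and a metadata dict mapping anchor IDs to locations.
    """
    parts = []
    citations = {}
    for frag in fragments:
        anchor_id = f'{frag["page"]}.{frag["reading_order"]}'
        parts.append(f'{frag["content"]} <c>{anchor_id}</c>')
        citations[anchor_id] = {"page": frag["page"], "bbox": frag["bbox"]}
    return " ".join(parts), {"citations": citations}


fragments = [
    {"page": 2, "reading_order": 1, "content": "SMOTE creates artificial minority samples.",
     "bbox": {"x1": 12, "y1": 15, "x2": 149, "y2": 328}},
    {"page": 2, "reading_order": 2, "content": "CDSMOTE decomposes the majority class.",
     "bbox": {"x1": 12, "y1": 35, "x2": 360, "y2": 400}},
]
text, metadata = build_chunk(fragments)
print(text)
```

Only `text` is embedded; `metadata` is stored next to the vector. Note that a `page.reading_order` ID is unique within one document only, so if chunks from several files can be retrieved together, the file name needs to be part of the lookup.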

This chunking strategy works with standard RAG frameworks. If you're using LangChain's RecursiveCharacterTextSplitter, for example, you can adapt these techniques by modifying how you construct your Document objects to include the citation metadata.

The retrieval side stays familiar. Whether you're using similarity search, hybrid retrieval, or reranking, your existing query engines don't need changes. The citation magic happens in two places: how you structure chunks (above) and how you prompt the LLM (below).

Returning Citations with LLM Responses

Once your chunks carry anchors, retrieval doesn’t really change. You can use the same dense, hybrid, or reranker setup you already have. The real magic happens during response generation.

If you're building the full RAG application, there are two small additions you might want to consider:

- Hide the anchors in prose, while keeping them in output.

Upon retrieval, the LLM will see the chunks with inline markers like <c>2.1</c>, but you can instruct it: "don’t print section anchors in your sentences — just return them as citation IDs." This will ensure the results are leveraging the anchors, but your user experience is cleaner.

- Turn IDs into clickable evidence.

When the model outputs the citation IDs, you can look them up in your metadata store. Because of how you stored the data, each ID maps back to a page and bounding box. From there you can generate a deep link or highlight that takes the user straight to the source.
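Instructions are not always followed, so it can be worth adding a small post-processing step for the first point as a safety net. The sketch below is an addition to the approach described here, not part of it: `split_answer` is a hypothetical helper that removes any `<c>…</c>` anchors that leak into the prose and returns them as citation IDs.

```python
import re

# Matches an anchor plus any whitespace directly before it.
ANCHOR = re.compile(r"\s*<c>\s*([^<]+?)\s*</c>")


def split_answer(raw_answer):
    """Remove leaked <c>…</c> anchors from the prose and return them as IDs."""
    ids = []
    for match in ANCHOR.finditer(raw_answer):
        if match.group(1) not in ids:  # keep first-seen order, drop repeats
            ids.append(match.group(1))
    clean = ANCHOR.sub("", raw_answer).strip()
    return clean, ids


clean, ids = split_answer(
    "CDSMOTE clusters the majority class <c>2.2</c> and then applies SMOTE <c>2.2</c>."
)
print(clean)
print(ids)
```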

For example, you could prompt the LLM with something like this:

Model prompt (simplified):

```text
Answer the question using the context below.
Ignore the <c>…</c> tags in your writing, but list them in a "citations" array.

Context: … CDSMOTE oversampling <c>2.1</c> …
```

Which will then return something like this:

Model output:

```json
{
  "answer": "CDSMOTE reduces class imbalance by clustering the majority class and oversampling the minority.",
  "citations": ["2.1"]
}
```

Then, before returning the answer to your user, you resolve the citation "2.1" to the spatial information {page: 2, bbox: …} and surface this information as a link or highlight in your UI.

This prompting pattern works across different LLMs (OpenAI, Anthropic, local models) and can be adapted for different frameworks. In LangChain, for example, you'd modify your prompt template.
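As a framework-agnostic starting point, the prompt can be assembled with plain string formatting. In this sketch the first two lines of the template come from the simplified prompt; the "Respond with JSON" line, the "Question:" line, and the helper names `build_prompt` and `parse_response` are assumptions added for illustration.

```python
import json

PROMPT_TEMPLATE = """Answer the question using the context below.
Ignore the <c>…</c> tags in your writing, but list them in a "citations" array.
Respond with JSON: {{"answer": "...", "citations": ["..."]}}

Context:
{context}

Question: {question}"""


def build_prompt(question, chunks):
    """Fill the template with retrieved chunk texts (anchors left in place)."""
    context = "\n\n".join(chunk.strip() for chunk in chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question)


def parse_response(raw):
    """Parse the model's JSON reply into (answer, citation_ids)."""
    data = json.loads(raw)
    return data["answer"], list(data.get("citations", []))


prompt = build_prompt(
    "What does CDSMOTE do?",
    ["CDSMOTE decomposes the majority class. <c>2.1</c>"],
)
answer, citation_ids = parse_response('{"answer": "ok", "citations": ["2.1"]}')
print(prompt)
```

In production you would also want to handle replies that are not valid JSON, for example by retrying or by using your provider's structured output mode.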

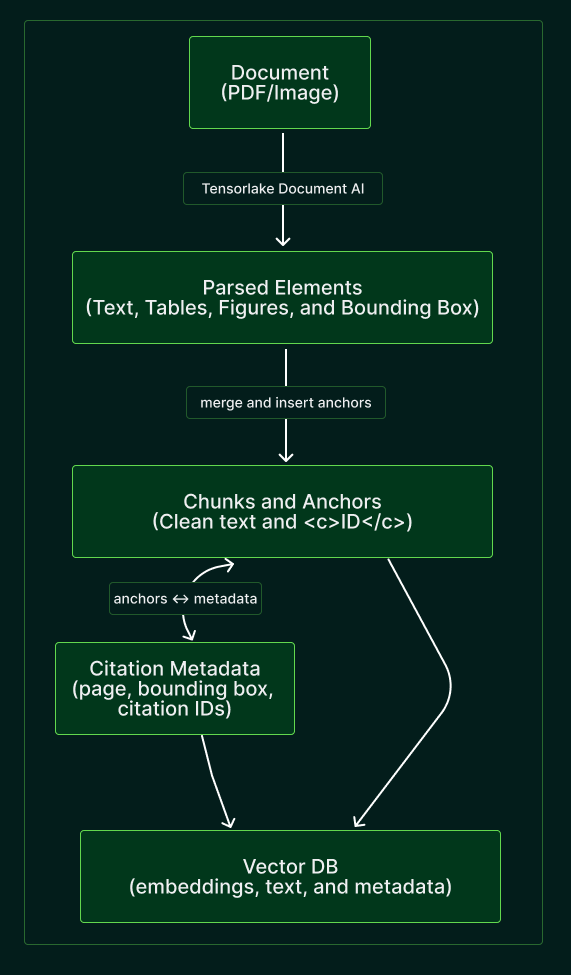

The full workflow would look like this:

And that’s it! Most of the heavy lifting for citation generation happens in preprocessing: embedding spatial information into chunks and storing it as metadata in the vector store. On the retrieval side, it’s just a matter of resolving citation IDs back to their coordinates and rendering them in the UI or turning them into links to the source.
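The resolution step might look like the sketch below. It makes several assumptions: citation metadata has the shape shown earlier, bounding boxes and page dimensions share the same units with a top-left origin, the viewer honors the common `#page=N` PDF URL fragment, and the URL and page size are placeholders. `resolve_citations` and `to_relative_box` are hypothetical helpers.

```python
def to_relative_box(bbox, page_width, page_height):
    """Express a bounding box as fractions of the page, for overlay rendering."""
    return {
        "left": bbox["x1"] / page_width,
        "top": bbox["y1"] / page_height,
        "width": (bbox["x2"] - bbox["x1"]) / page_width,
        "height": (bbox["y2"] - bbox["y1"]) / page_height,
    }


def resolve_citations(citation_ids, chunk_metadata, page_sizes, file_url):
    """Map citation IDs to a page link and a highlight box."""
    known = chunk_metadata.get("citations", {})
    resolved = []
    for cid in citation_ids:
        target = known.get(cid)
        if target is None:
            continue  # the model cited an ID that was never indexed; skip it
        width, height = page_sizes[target["page"]]
        resolved.append({
            "id": cid,
            "page": target["page"],
            "link": f"{file_url}#page={target['page']}",
            "highlight": to_relative_box(target["bbox"], width, height),
        })
    return resolved


chunk_metadata = {
    "citations": {"2.1": {"page": 2, "bbox": {"x1": 100, "y1": 200, "x2": 300, "y2": 400}}}
}
resolved = resolve_citations(
    ["2.1", "9.9"],          # "9.9" is unknown and gets dropped
    chunk_metadata,
    {2: (1000, 800)},        # page number -> (width, height), placeholder values
    "https://example.com/paper.pdf",
)
print(resolved)
```

Dropping unknown IDs rather than failing keeps a hallucinated citation from breaking the response, though logging those cases is useful for evaluating prompt quality.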

Beyond RAG: Citations in Structured Data Extraction

The citation techniques we've covered work well for conversational RAG applications. But what if you need structured data extraction with citations? Think financial reports where you extract specific metrics, or legal documents where you pull contract terms: scenarios where you need both the extracted values and proof of where they came from.

Tensorlake's Structured Extraction API solves this with a single parameter.

Unlike building citation pipelines manually (which requires coordinating parsing, chunking, retrieval, and response generation), this API handles the entire citation chain in a single call, similar to how Anthropic's Claude or OpenAI's structured outputs work, but with source linking.

Structured extraction with citations:

```python
from tensorlake.documentai import DocumentAI, StructuredExtractionOptions
from pydantic import BaseModel, Field


class FinancialMetrics(BaseModel):
    revenue: float = Field(description="Annual revenue")
    net_income: float = Field(description="Net income")
    eps: float = Field(description="Earnings per share")


doc_ai = DocumentAI()

structured_extraction_options = [
    StructuredExtractionOptions(
        schema_name="FinancialMetrics",
        json_schema=FinancialMetrics,
        provide_citations=True,  # <-- Citations for every field
    )
]

result = doc_ai.parse_and_wait(
    file="path/to/10k-filing.pdf",
    structured_extraction_options=structured_extraction_options,
)
```

The output includes bounding box coordinates for every extracted field:

Cited structured output:

```json
{
  "revenue": 394328000000,
  "revenue_citation": [
    {
      "page_number": 47,
      "x1": 125,
      "y1": 340,
      "x2": 245,
      "y2": 365
    }
  ],
  "net_income": 93736000000,
  "net_income_citation": [
    {
      "page_number": 47,
      "x1": 125,
      "y1": 400,
      "x2": 245,
      "y2": 425
    }
  ]
}
```

This lets you trace every extracted field, and every number you store in your database, back to its exact location in the original document.
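If you store these values downstream, it can help to pair each field with its citations first. The sketch below works on a plain dict shaped like the JSON above; how you obtain that dict from the parse result is not shown here, and `pair_fields_with_citations` is a hypothetical helper that relies on the `_citation` suffix convention visible in the output.

```python
def pair_fields_with_citations(output, suffix="_citation"):
    """Group each extracted value with its list of citation boxes."""
    paired = {}
    for key, value in output.items():
        if key.endswith(suffix):
            continue  # citation entries are attached to their field below
        paired[key] = {"value": value, "citations": output.get(key + suffix, [])}
    return paired


output = {
    "revenue": 394328000000,
    "revenue_citation": [{"page_number": 47, "x1": 125, "y1": 340, "x2": 245, "y2": 365}],
    "net_income": 93736000000,
    "net_income_citation": [{"page_number": 47, "x1": 125, "y1": 400, "x2": 245, "y2": 425}],
}
paired = pair_fields_with_citations(output)
print(paired["revenue"])
```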

The same citation principles apply: you get page numbers, bounding boxes, and verifiable evidence, but without building the citation infrastructure yourself. You can learn more about this in our blog post on structured output citations.

Build Citation-Ready Agentic Applications Today

Citation-aware RAG isn't just about trust; it's about building agentic applications that can be audited, verified, and deployed in production with confidence. Whether you're processing legal documents, financial reports, or research papers, users need to see the evidence behind AI-generated insights.

Ready to get started? We've created resources to help you implement citation-ready RAG:

Complete Tutorial Notebook

Follow our step-by-step Jupyter notebook that walks through building a citation-aware RAG system from scratch. Includes document parsing, chunking with spatial anchors, vector storage, and response generation with verifiable citations.

→ Access the RAG Citations Notebook

Technical Documentation

Dive deeper into Tensorlake's document parsing and structured extraction APIs. Learn about spatial metadata, bounding box coordinates, and integration with popular vector databases.

Join Our Community

Have questions about implementing citations in your RAG pipeline? Want to share your use case or get help with integration? Join hundreds of developers building the next generation of verifiable agentic applications.
