Building a Hacker News Podcast Generator with Gemini 3 and ElevenLabs

TL;DR#

This article shows how to build a simple podcast generator that turns Hacker News posts into short audio summaries using a single Tensorlake Application. The entire agent workflow runs on Tensorlake to achieve reliable execution and scalable data preparation, including web scraping, text cleaning, summarization, and audio generation. Gemini and ElevenLabs are invoked as external services from within Tensorlake functions for summarization and text-to-speech.

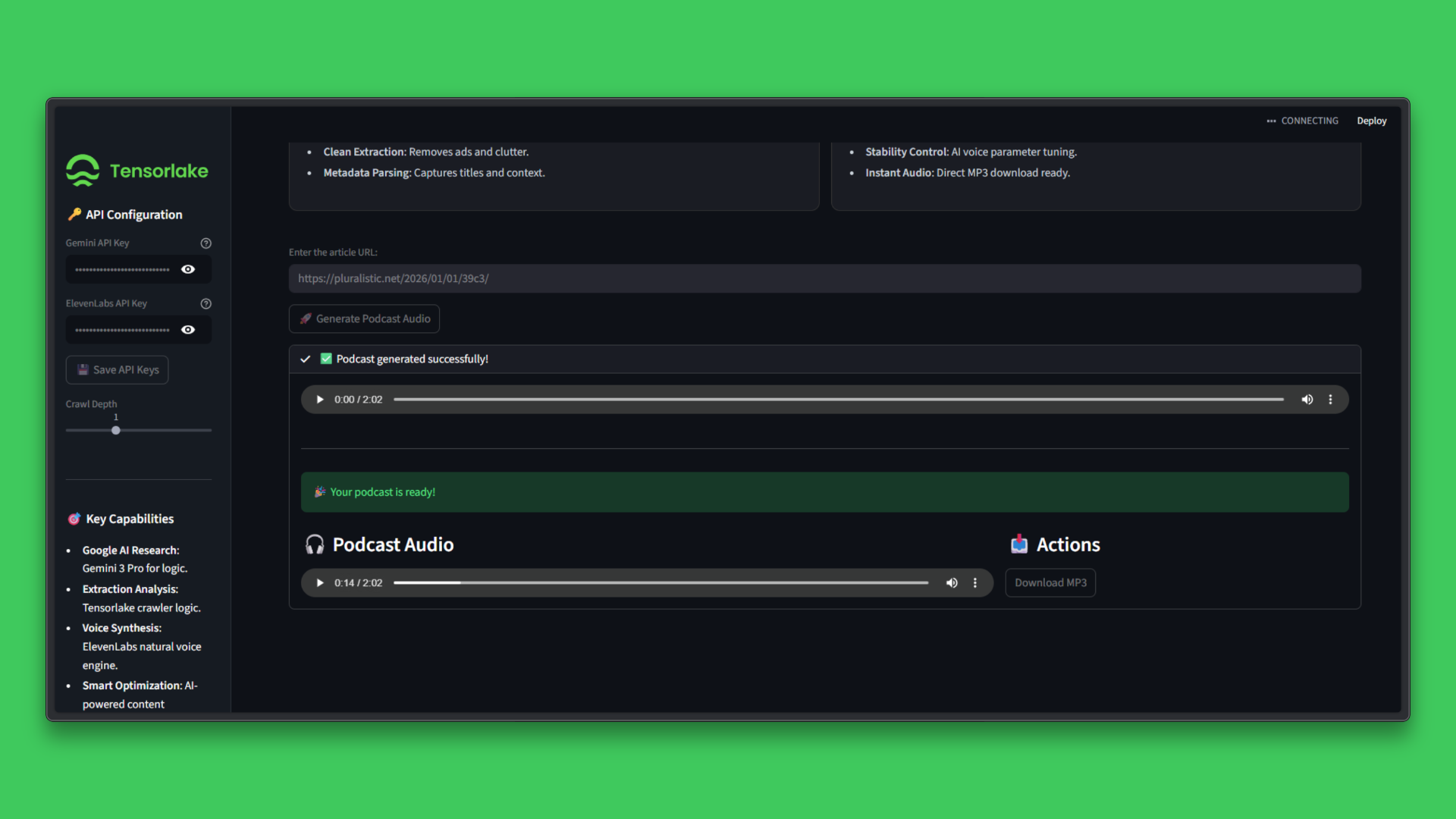


I spend a lot of time on Hacker News, but I rarely have the time to read every post and the long articles they link to. On many days, I just want a quick way to understand what people are discussing without opening multiple tabs and skimming through everything.

That led me to build a small podcast generator. The idea is simple: collect content linked from Hacker News, distill it into short summaries, and turn those summaries into audio that I can listen to while doing other things.

What I wanted to explore in this project was not just summarization or text-to-speech, but how to run an agent workflow cleanly and reliably. Instead of stitching together scripts, background jobs, and retries by hand, I wanted a single execution model where data preparation and tool invocation are orchestrated predictably.

In this project, the entire agent workflow runs as a single Tensorlake Application. Tensorlake is used as the execution runtime that coordinates scraping, text preparation, summarization, and audio generation. Gemini and ElevenLabs are invoked from within this workflow as external services, while Tensorlake manages execution, orchestration, and outputs.

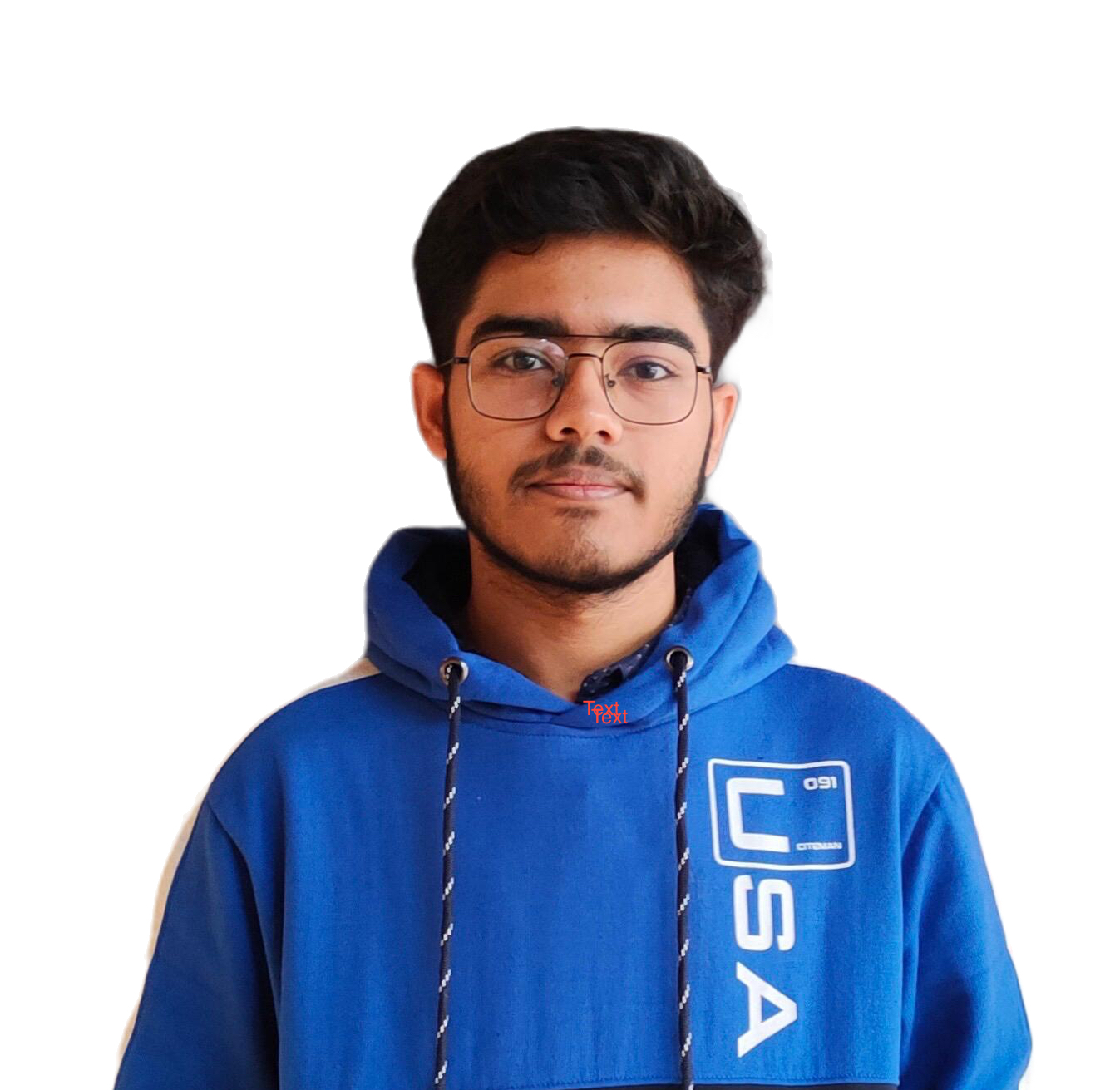

Architecture Overview#

The system is designed as a single, end-to-end workflow that transforms trending links into short, podcast-style audio summaries.

- Content retrieval and preparation: Links sourced from Hacker News are fetched and converted into clean, readable text so they can be reliably processed by downstream steps.

- Text summarization: The prepared content is sent to a large language model to generate concise summaries suitable for audio narration.

- Audio generation: These summaries are then converted into spoken audio using a text-to-speech service.

- End-to-end execution: All steps run as part of one coordinated workflow, ensuring that data flows consistently from content ingestion to final audio output, with intermediate results preserved across stages.

Why Tensorlake Runs the Agent#

This project requires running multiple execution steps in sequence, including content retrieval, text normalization, summarization, and audio generation. These steps must be coordinated reliably as part of a single agent workflow rather than as loosely connected scripts or background jobs.

These requirements are execution-focused rather than business-logic-focused, which makes Tensorlake a good fit for running the agent.

- Durable function execution: Each step of the podcast agent runs as a durable Tensorlake function. For example, if the summarization step fails due to a transient Gemini API error, Tensorlake retries only that function. Previously completed steps, such as web scraping and text preparation, are not re-executed. This makes the workflow resilient to partial failures without requiring the entire pipeline to be restarted.

- Serverless orchestration with dynamic fan-out: The scraping stage handles a variable number of articles or links discovered at runtime. The workflow coordinates content retrieval and preparation within a single managed execution.

- Built-in orchestration and state handling: Tensorlake manages the ordering and data flow between steps. Scraped content flows into text preparation, then into summarization, and finally into audio generation, all as a single, managed execution. Retries, timeouts, and dependencies between functions are handled by Tensorlake rather than by custom glue code.

- Native support for large inputs and outputs: The workflow produces outputs of different sizes, including full article text, summary scripts, and MP3 audio files. Tensorlake supports function inputs and outputs of arbitrary size, so the agent does not need special handling for large text payloads or audio files.

Together, these capabilities allow the podcast agent to be implemented as a single Tensorlake Application composed of multiple Tensorlake Functions, with external services like Gemini and ElevenLabs invoked only where needed.
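To make the idea of step-level retries concrete, here is a minimal plain-Python sketch. It is not how Tensorlake implements durability; it only illustrates the behavior described above: a step that has already completed is read from a cache, and only the failing step is retried. The names `run_step`, `scrape`, and `summarize` are hypothetical.

```python
import time
from typing import Any, Callable


def run_step(name: str, fn: Callable[[Any], Any], arg: Any,
             cache: dict[str, Any], max_attempts: int = 3,
             delay: float = 1.0) -> Any:
    """Run one step, reusing a cached result and retrying on failure."""
    if name in cache:  # completed earlier, so it is not re-executed
        return cache[name]
    for attempt in range(1, max_attempts + 1):
        try:
            cache[name] = fn(arg)
            return cache[name]
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            time.sleep(delay)


# Hypothetical steps: the summarizer fails once, then succeeds.
calls = {"scrape": 0, "summarize": 0}


def scrape(url: str) -> str:
    calls["scrape"] += 1
    return f"text from {url}"


def summarize(text: str) -> str:
    calls["summarize"] += 1
    if calls["summarize"] == 1:
        raise ConnectionError("transient API error")
    return text.upper()


cache: dict[str, Any] = {}
text = run_step("scrape", scrape, "https://example.com", cache, delay=0)
summary = run_step("summarize", summarize, text, cache, delay=0)
```

In this sketch the scrape step runs once and the summarize step runs twice, which mirrors the "retry only the failed function" behavior without restarting the whole pipeline.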

Building the Podcast Generator#

Now, we’ll build a simple podcast generator that takes an article linked from Hacker News, extracts and cleans its text content, summarizes it using Gemini, and then converts the summary into audio using ElevenLabs.

In this project, we build a single Tensorlake application where every step is implemented as a Tensorlake function. These functions are composed into one end-to-end agent that crawls content, prepares clean text, generates a podcast script, and produces the final audio file output.

Prerequisites#

Before starting, make sure you have the following:

- Python 3.11 or later

- A Gemini API key

- An ElevenLabs API key

All steps below are executed locally using Python.

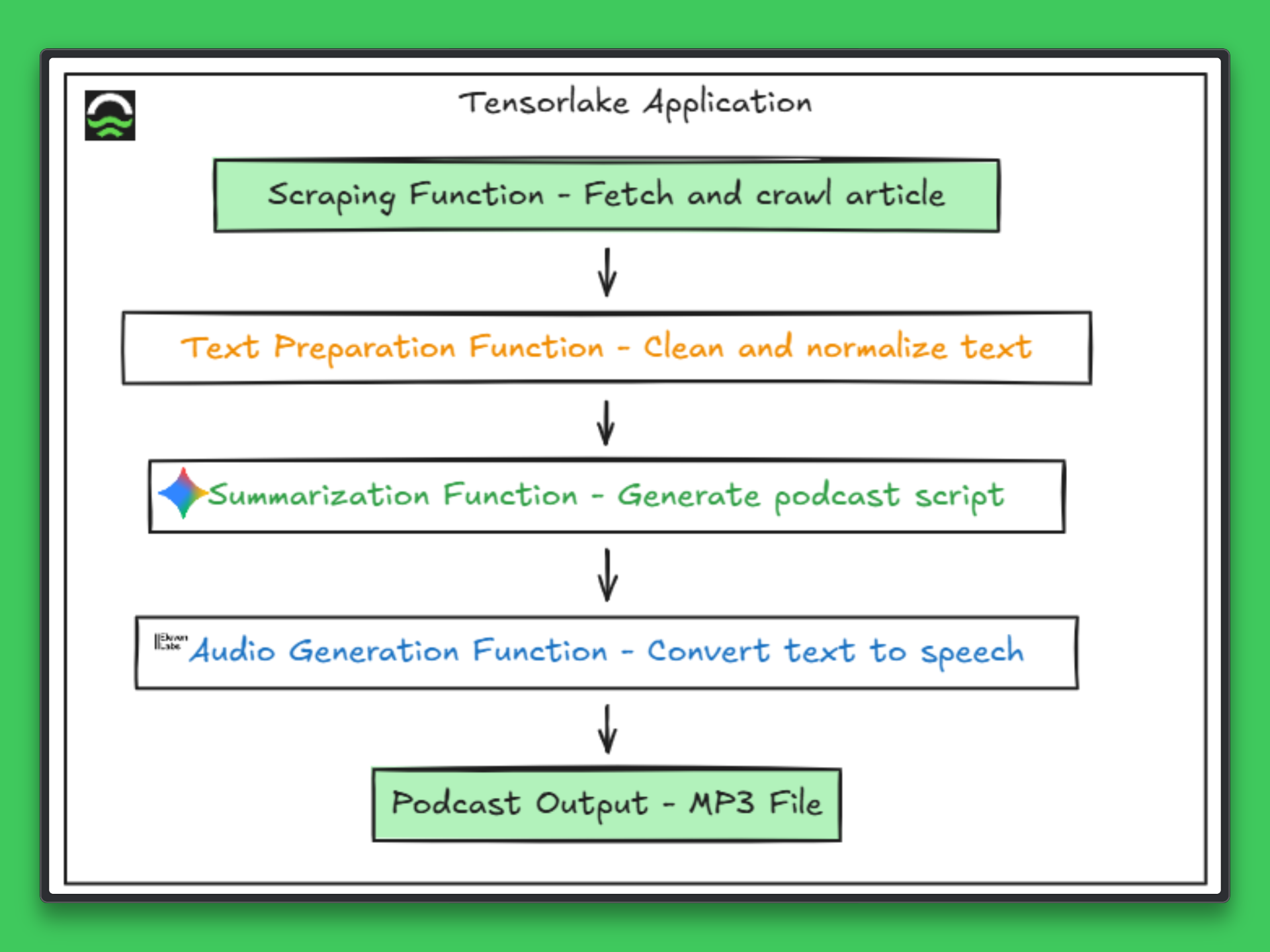

Generate a Gemini API Key

- Go to https://aistudio.google.com/

- Create an API key

- Keep it available for the next step

- Replace the key in your .env file

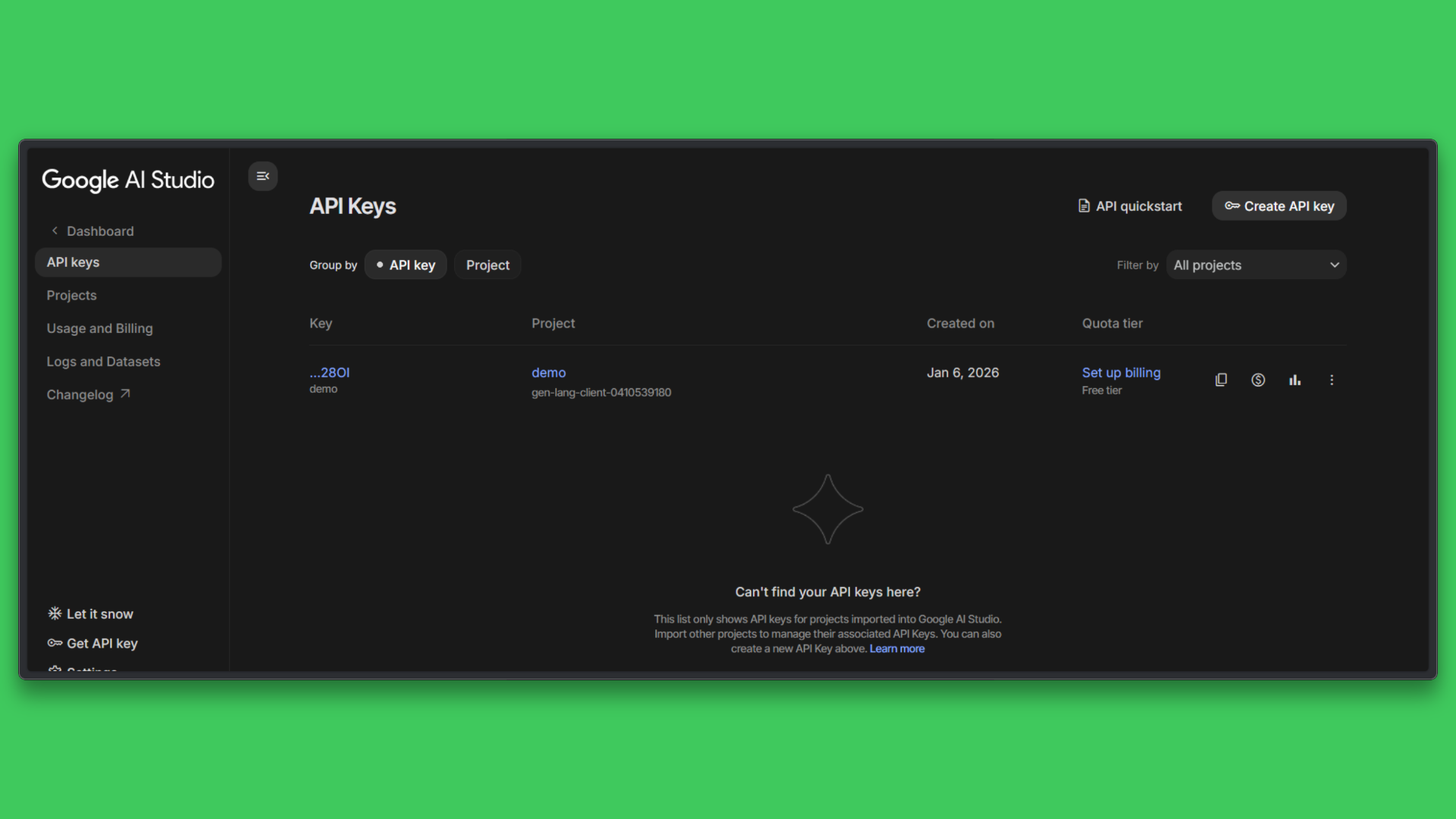

Generate an ElevenLabs API Key

- Go to https://elevenlabs.io/app/developers/api-keys

- Create an account

- Generate an API key from your profile

- Replace the key in your .env file

Step 1: Set Up a Virtual Environment#

Create a new project folder and open it in your editor.

Then create and activate a virtual environment.

python -m venv venv

Activate the environment:

On Windows:

venv\Scripts\activate

On macOS or Linux:

source venv/bin/activate

Once activated, your terminal should show that the virtual environment is in use.

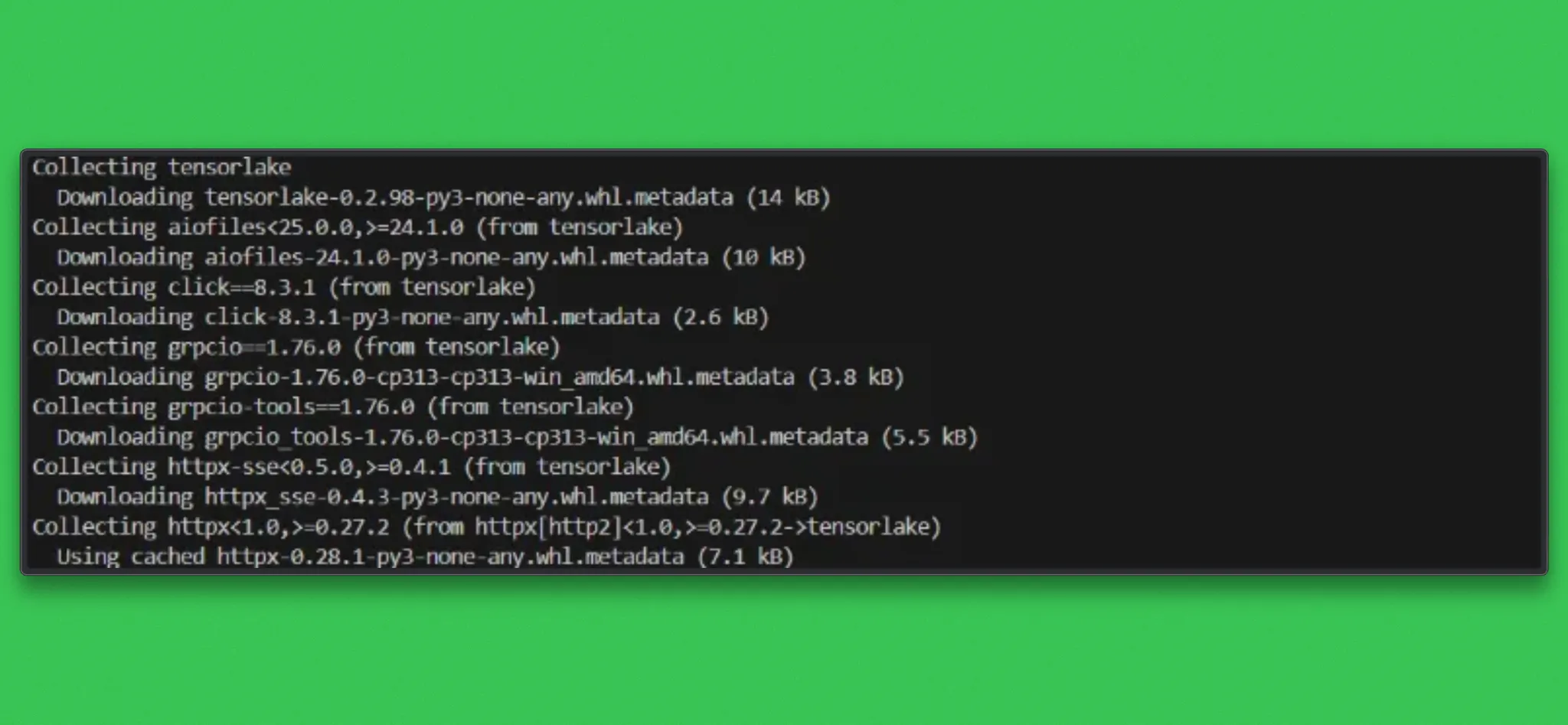

Step 2: Install Dependencies#

Create a requirements.txt file in your project folder with the following content:

    tensorlake
    pydoll-python
    streamlit
    google-genai
    requests
    python-dotenv

Install the required dependencies:

pip install -r requirements.txt

This installs Tensorlake, the headless browser dependency used by the scraper, and the libraries required for Gemini and ElevenLabs integration.

Create the .env file

The .env file is used to securely store API keys and configuration values outside the source code, and it will be referenced in the following steps when integrating Gemini and ElevenLabs.

In the project directory, create a file named:

.env

Add the following exact variable names:

GEMINI_API_KEY=PASTE_YOUR_GEMINI_API_KEY_HERE

ELEVENLABS_API_KEY=PASTE_YOUR_ELEVENLABS_API_KEY_HERE
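Before running anything, it can help to confirm that both variables are actually set. The helper below is a small sketch, not part of the repository; `missing_keys` is a hypothetical name, and it only inspects whatever environment mapping it is given.

```python
import os
from typing import Mapping

REQUIRED_KEYS = ("GEMINI_API_KEY", "ELEVENLABS_API_KEY")


def missing_keys(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required variable names that are unset or blank."""
    return [key for key in REQUIRED_KEYS if not env.get(key, "").strip()]


# Typical use, after loading the .env file with python-dotenv:
#   from dotenv import load_dotenv
#   load_dotenv()
#   if missing_keys():
#       raise SystemExit(f"Missing keys: {missing_keys()}")
```

Checking up front gives a clear error message instead of a failed API call halfway through the workflow.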

Now, create a Python file named podcast_agent.py.

Step 3: Web Scraping with Tensorlake#

The scraping step is implemented as a Tensorlake Function within a Tensorlake Application. This function performs a depth-first crawl starting from a given URL, with configurable limits such as max_depth and max_links.

Content fetching is executed inside a dedicated scraper image that includes Chromium and PyDoll, allowing JavaScript-rendered pages to be loaded reliably during execution. Each page fetch runs as an isolated function invocation, so failures on individual pages do not terminate the overall crawl.

HTML pages are processed into clean, readable text, while binary resources such as images or PDFs are detected and encoded with metadata. The crawler maintains domain boundaries and avoids revisiting previously processed links to keep execution bounded and predictable.

By running scraping as a Tensorlake Function rather than a standalone application, the crawler becomes a composable workflow step that feeds structured content directly into downstream summarization and audio generation functions.

Refer to the full code in the project repository: https://github.com/tensorlakeai/examples/tree/main/podcast-agent
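The repository's scraper relies on PyDoll and Chromium, and the sketch below is not that code. It only illustrates the traversal rules described in this step (depth-first order, max_depth, max_links, same-domain links, no revisits) using the standard library. The `fetch_links` callable is a hypothetical stand-in for the real page fetch.

```python
from typing import Callable, Iterable
from urllib.parse import urljoin, urlparse


def crawl(start_url: str,
          fetch_links: Callable[[str], Iterable[str]],
          max_depth: int = 2,
          max_links: int = 5) -> list[str]:
    """Depth-first crawl that stays on the start domain and visits each URL once."""
    domain = urlparse(start_url).netloc
    visited: list[str] = []   # URLs in the order they were processed
    seen: set[str] = set()    # fast lookup to avoid revisiting

    def visit(url: str, depth: int) -> None:
        if depth > max_depth or len(visited) >= max_links or url in seen:
            return
        seen.add(url)
        visited.append(url)
        for href in fetch_links(url):
            target = urljoin(url, href)             # resolve relative links
            if urlparse(target).netloc == domain:   # stay within the domain
                visit(target, depth + 1)

    visit(start_url, 0)
    return visited
```

Passing the fetcher in as a parameter keeps the traversal logic easy to test with a fake site, independent of any browser automation.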

Step 4: Summarization with Gemini#

Once the crawl completes, a Tensorlake function extracts clean, readable text from the scraped results. This step normalizes the data and prepares it for language model input by removing empty content and consolidating text across pages.
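As a rough illustration of this preparation step, the sketch below drops empty pages, collapses whitespace, and joins the remaining text. The page shape (a dict with a "text" key) and the name `prepare_text` are assumptions made for the example; the repository's function may structure its data differently.

```python
import re


def prepare_text(pages: list[dict]) -> str:
    """Drop empty pages, collapse whitespace, and join what remains."""
    chunks = []
    for page in pages:
        # Treat a missing or None "text" value as empty content.
        text = re.sub(r"\s+", " ", page.get("text") or "").strip()
        if text:
            chunks.append(text)
    # Separate pages with a blank line so page boundaries stay visible.
    return "\n\n".join(chunks)
```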

The summarization step uses Gemini through the google-genai client. The cleaned article text is passed to the gemini-2.5-flash model with a prompt designed to generate a concise, podcast-style script. Input size is intentionally limited to remain compatible with free or low-tier usage.

Gemini is used strictly as an external inference service, while Tensorlake manages execution, orchestration, and data flow between functions.

    # Assumes the Tensorlake decorator is imported at the top of podcast_agent.py.
    from tensorlake.applications import function


    @function(secrets=["GEMINI_API_KEY"])
    def summarize_with_gemini(clean_text: str) -> str:
        """
        Generate a podcast-style summary.
        """
        from google import genai
        import os

        client = genai.Client(api_key=os.environ["GEMINI_API_KEY"])

        # Only the first 6,000 characters are sent, which keeps the request small.
        prompt = f"""
        Create a short podcast-style summary of the following article.
        Keep the tone clear, neutral, and easy to listen to.

        Article:
        {clean_text[:6000]}
        """

        response = client.models.generate_content(
            model="gemini-2.5-flash",
            contents=prompt,
        )
        return response.text
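The `[:6000]` slice in the function above can cut the article mid-sentence. One optional refinement, sketched here under the assumption that sentences end with ". ", "! ", or "? ", is to trim back to the last sentence boundary inside the limit. The helper name `truncate_at_sentence` is hypothetical and is not part of the repository.

```python
def truncate_at_sentence(text: str, limit: int = 6000) -> str:
    """Cut text to at most `limit` characters, preferring a sentence end."""
    if len(text) <= limit:
        return text
    head = text[:limit]
    # Position of the last sentence-ending punctuation followed by a space.
    cut = max(head.rfind(". "), head.rfind("! "), head.rfind("? "))
    # Fall back to a hard cut when no sentence end is found.
    return head[:cut + 1] if cut != -1 else head
```

With this helper, the prompt could use `truncate_at_sentence(clean_text)` in place of the slice, so the model never sees a dangling half sentence.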

Step 5: Audio Generation with ElevenLabs#

The final step converts the generated podcast script into audio using ElevenLabs. A dedicated Tensorlake function reads the text content and sends it to the ElevenLabs Text-to-Speech API using a fixed voice ID.

The model used is eleven_v3, configured with stability and similarity settings to produce clear and natural narration. The resulting MP3 audio is returned as a file object, completing the podcast generation pipeline.

This separation allows audio generation to be retried or swapped with different voices or models without modifying the rest of the application.

    # Assumes these names are imported at the top of podcast_agent.py.
    from tensorlake.applications import File, function


    @function(secrets=["ELEVENLABS_API_KEY"])
    def generate_audio(script_text: str) -> File:
        """
        Convert podcast script text into audio using ElevenLabs TTS.
        """
        import os
        import requests

        VOICE_ID = "21m00Tcm4TlvDq8ikWAM"
        url = f"https://api.elevenlabs.io/v1/text-to-speech/{VOICE_ID}"

        headers = {
            "xi-api-key": os.environ["ELEVENLABS_API_KEY"],
            "Content-Type": "application/json",
            "Accept": "audio/mpeg",
        }

        payload = {
            "text": script_text,
            "model_id": "eleven_v3",
            "voice_settings": {
                "stability": 0.5,
                "similarity_boost": 0.5,
            },
        }

        # A timeout keeps the function from hanging on a stalled connection.
        response = requests.post(url, json=payload, headers=headers, timeout=60)

        if response.status_code != 200:
            raise RuntimeError(
                f"ElevenLabs TTS failed: {response.status_code} {response.text}"
            )

        # Return the MP3 bytes as a Tensorlake file object.
        return File(
            content=response.content,
            content_type="audio/mpeg",
        )
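Since the voice and model are meant to be swappable, one possible refactor is to build the request from parameters instead of hard-coding them. This is a sketch rather than the repository's code: `build_tts_request` is a hypothetical helper, and its defaults simply mirror the values used above.

```python
from typing import Any

API_ROOT = "https://api.elevenlabs.io/v1/text-to-speech"


def build_tts_request(text: str, api_key: str,
                      voice_id: str = "21m00Tcm4TlvDq8ikWAM",
                      model_id: str = "eleven_v3",
                      stability: float = 0.5,
                      similarity_boost: float = 0.5) -> dict[str, Any]:
    """Return the URL, headers, and JSON body for one text-to-speech call."""
    return {
        "url": f"{API_ROOT}/{voice_id}",
        "headers": {
            "xi-api-key": api_key,
            "Content-Type": "application/json",
            "Accept": "audio/mpeg",
        },
        "json": {
            "text": text,
            "model_id": model_id,
            "voice_settings": {
                "stability": stability,
                "similarity_boost": similarity_boost,
            },
        },
    }
```

The returned dict can be passed straight to `requests.post(**build_tts_request(script_text, api_key), timeout=60)`, so trying a different voice only means changing one argument.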

The complete implementation, including the Tensorlake application, scraping logic, summarization agent, and audio generation pipeline, is available in the project repository:

Repository: https://github.com/tensorlakeai/examples/tree/main/podcast-agent

This structure makes it easy to extend the agent with additional processing steps or deploy it as a cloud-hosted Tensorlake application.

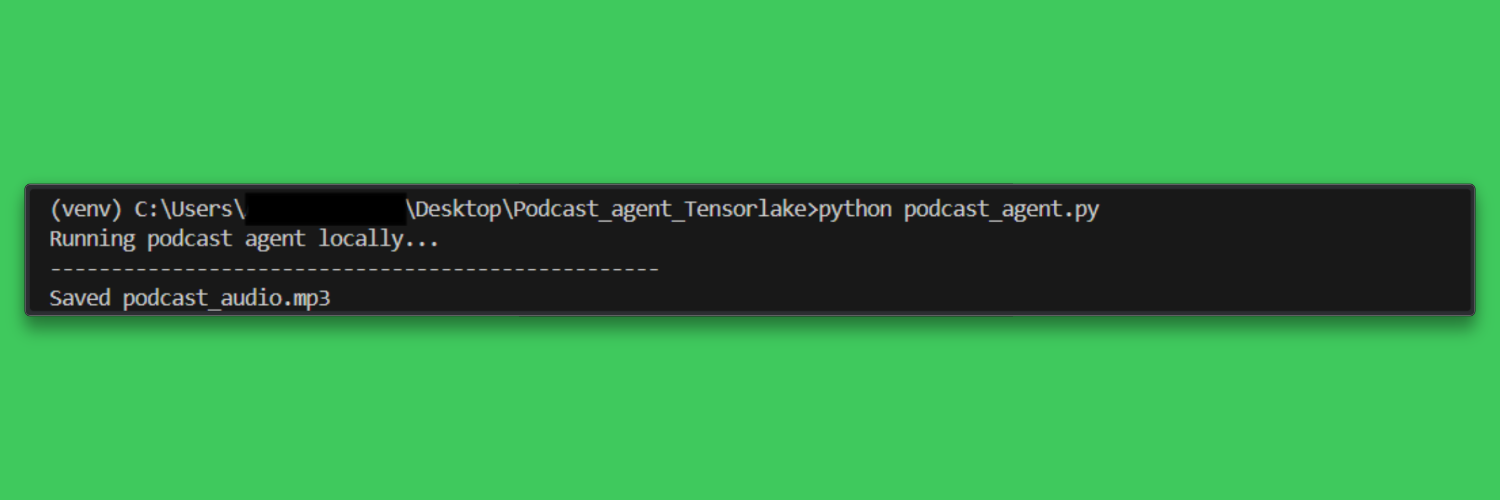

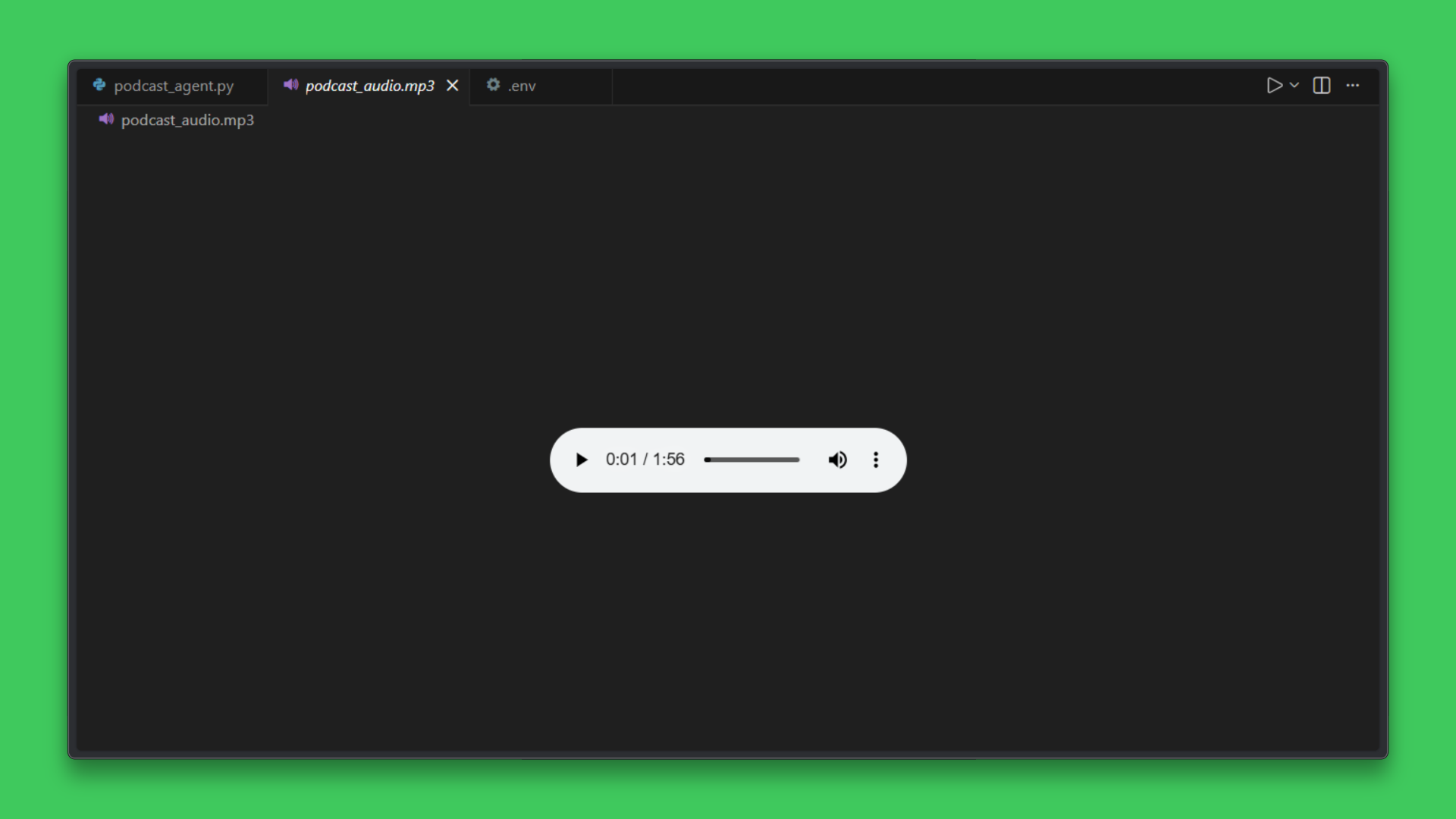

Run the script:

python podcast_agent.py

You should now see podcast_audio.mp3 in the folder. Playing this file confirms the end-to-end pipeline works.

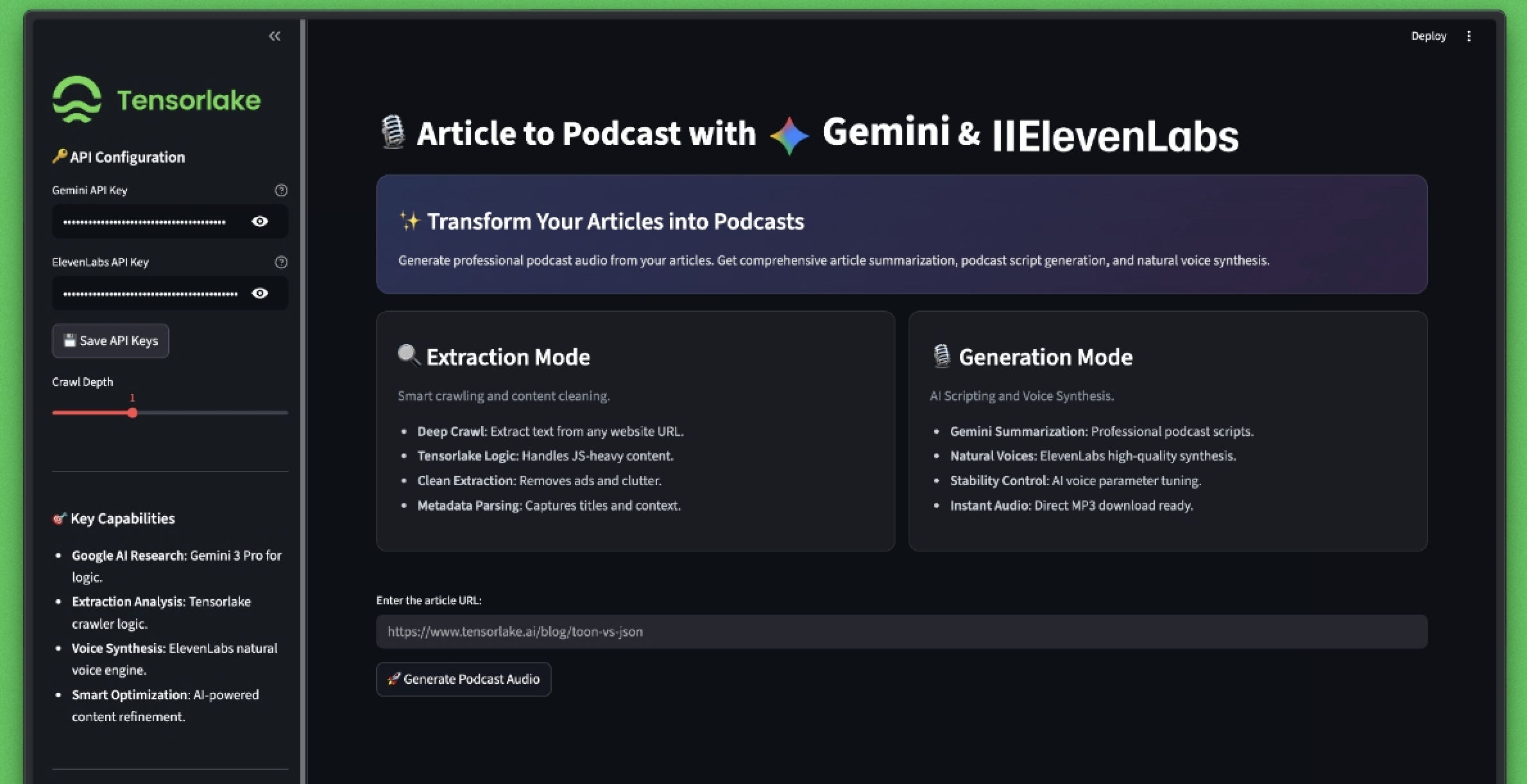

Creating the UI#

Now that the backend workflow is in place, the next step is to build the user interface. The UI is created using Streamlit and acts as a simple layer that allows users to interact with the Tensorlake-powered podcast generation pipeline by providing an article URL and basic configuration.

The interface focuses on clarity and ease of use. All core processing, such as crawling, summarization, and audio generation, runs inside the Tensorlake application, while the UI only triggers the workflow and displays the final podcast audio with playback and download options.

For the full implementation, refer to the app.py file in the repository.

Installing Streamlit

Streamlit is already listed in requirements.txt. If it is not yet installed in the virtual environment used for the project, install it:

pip install streamlit

Now run the file app.py using the command below:

streamlit run app.py

This opens the Streamlit interface in your browser. Enter an article link, and the app generates a podcast.

Deploying to the Tensorlake Cloud#

Login#

Authenticate with Tensorlake from your terminal:

tensorlake login

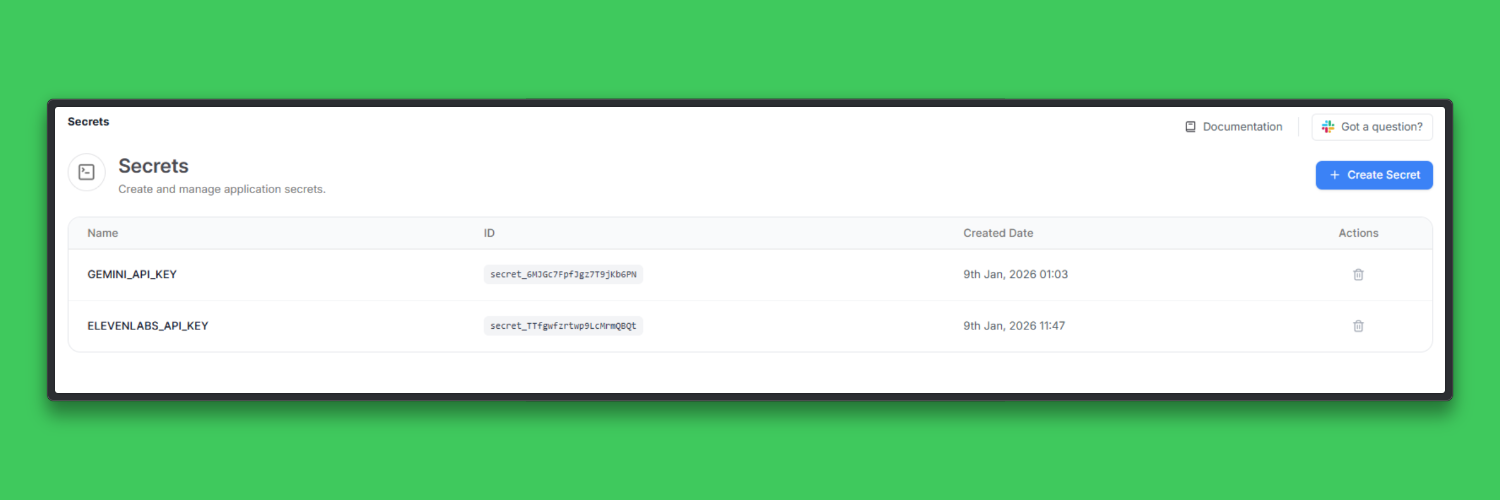

Set Secrets#

The podcast agent uses external services (Gemini and ElevenLabs), so secrets must be configured.

Option A: Using Tensorlake UI

- Go to Agentic Apps → Secrets

- Add the following secrets: GEMINI_API_KEY and ELEVENLABS_API_KEY

- Save the changes

Option B: Using CLI

tensorlake secrets set GEMINI_API_KEY=your_gemini_key

tensorlake secrets set ELEVENLABS_API_KEY=your_elevenlabs_key

Secrets are securely injected into the functions at runtime.

Verify that the secrets are set correctly:

tensorlake secrets list

Export Tensorlake API Key#

If required for local testing or automation, export your Tensorlake API key:

export TENSORLAKE_API_KEY=tl_apiKey_xxxxxxxxx

This allows the CLI and local execution helpers to communicate with Tensorlake.

Deploy the Agent#

Deploy the application using the same podcast_agent.py file:

tensorlake deploy podcast_agent.py

During deployment:

- Tensorlake validates the application and functions

- Container images are built

- The agent is registered under Agentic Apps

On successful deployment, Tensorlake returns a permanent endpoint for the agent:

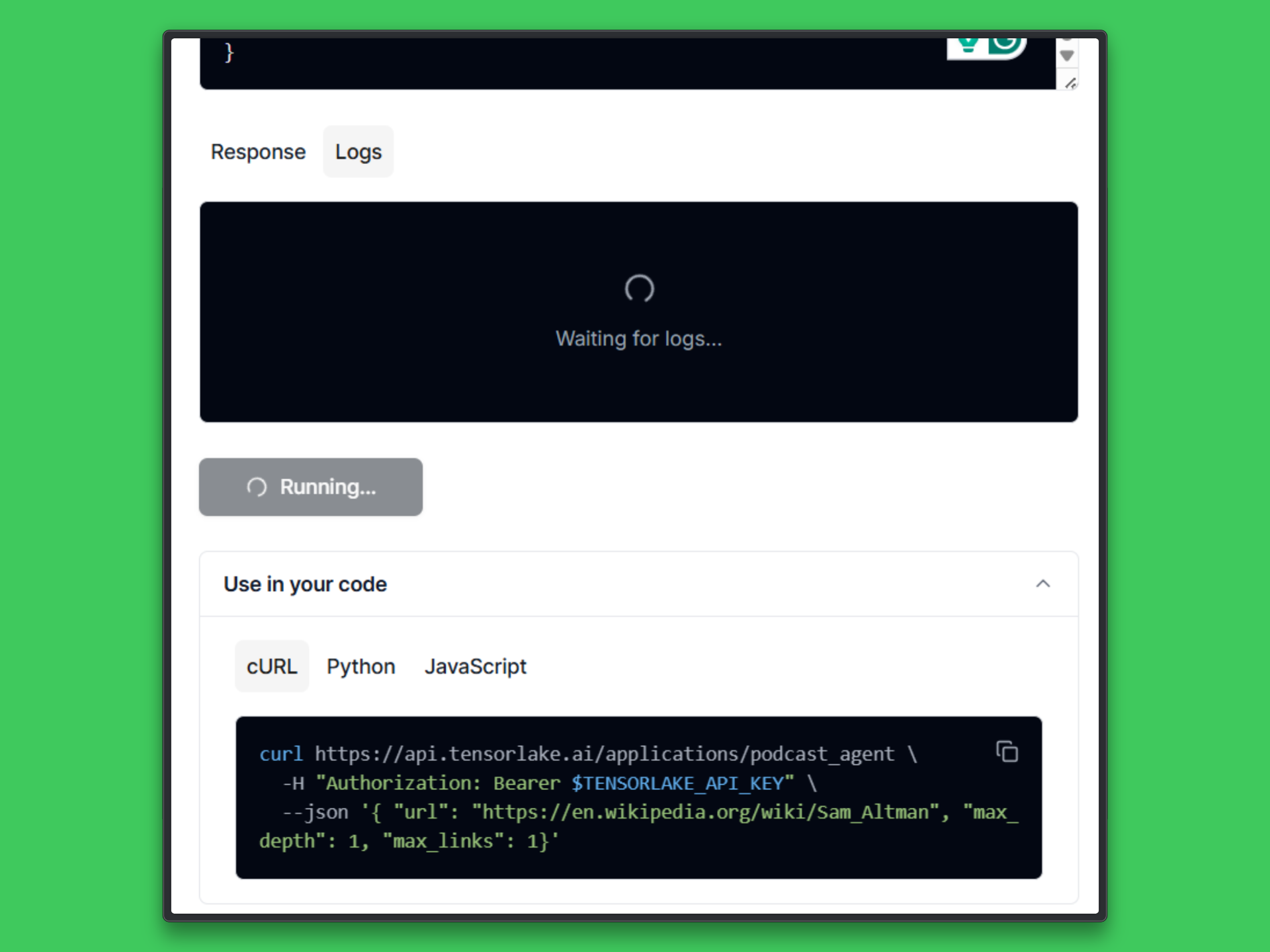

Invoke the Agent#

Option A: Using Tensorlake UI

- Open Agentic Apps → Your App

- Click Invoke

- Provide the input parameters, for example url, max_depth, and max_links

Option B: Using CLI

    curl https://api.tensorlake.ai/applications/podcast_agent \
      -H "Authorization: Bearer $TENSORLAKE_API_KEY" \
      --json '{ "url": "example_string", "max_depth": 3, "max_links": 5}'

After you invoke the agent, Tensorlake generates a request ID.
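If you would rather trigger the agent from Python than from curl, the same request can be built with the standard library. This sketch mirrors the curl call (endpoint, bearer token, JSON body); `build_invoke_request` is a hypothetical helper, and the shape of the response is not assumed here.

```python
import json
import urllib.request

ENDPOINT = "https://api.tensorlake.ai/applications/podcast_agent"


def build_invoke_request(api_key: str, url: str,
                         max_depth: int = 3,
                         max_links: int = 5) -> urllib.request.Request:
    """Build the POST request equivalent to the curl command."""
    body = json.dumps(
        {"url": url, "max_depth": max_depth, "max_links": max_links}
    ).encode("utf-8")
    return urllib.request.Request(
        ENDPOINT,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            # curl's --json flag sets both of these headers.
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )


# To send it (requires a valid key and network access):
#   import os
#   req = build_invoke_request(os.environ["TENSORLAKE_API_KEY"], "https://example.com")
#   with urllib.request.urlopen(req, timeout=30) as resp:
#       print(resp.read().decode())
```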

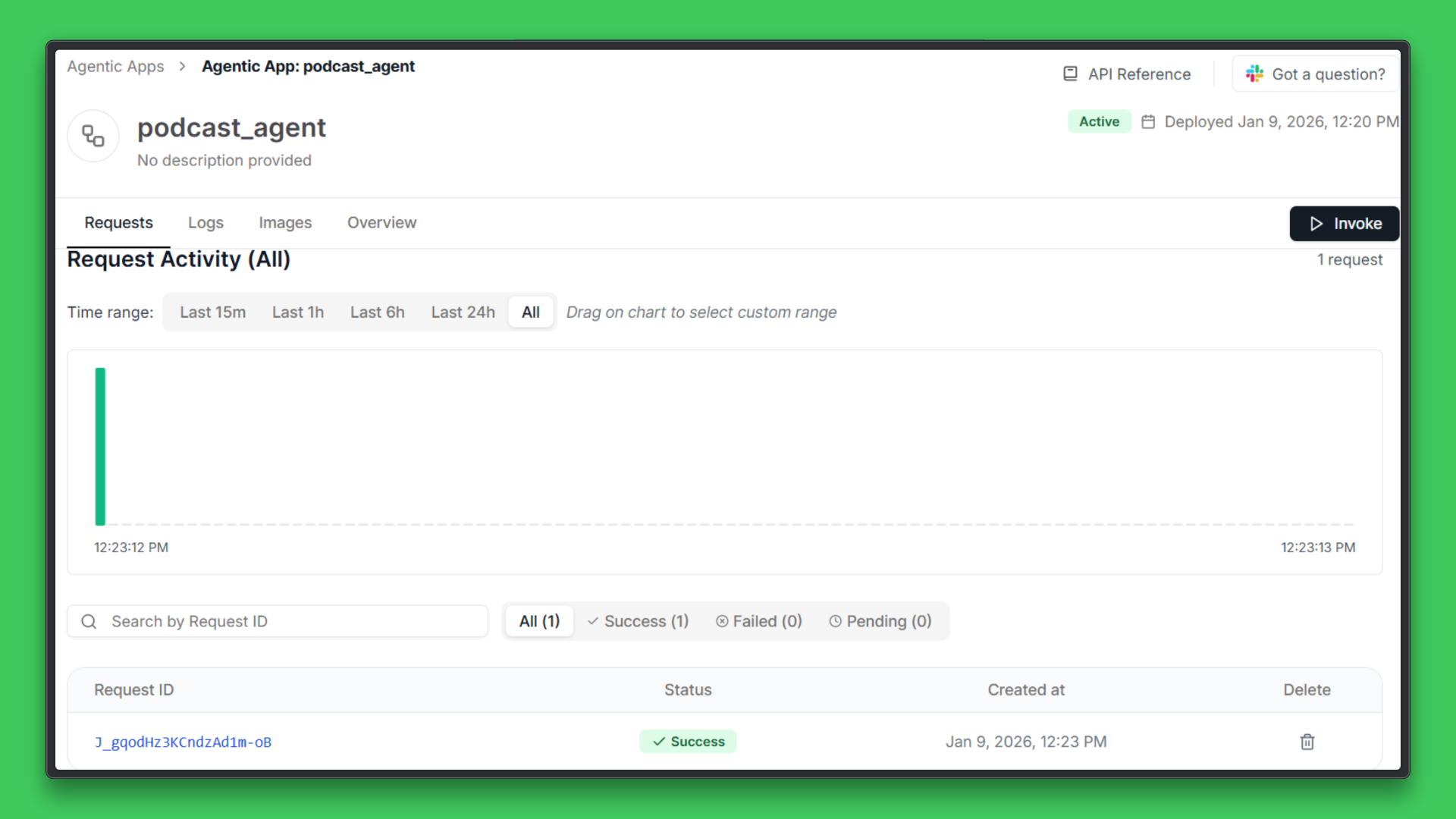

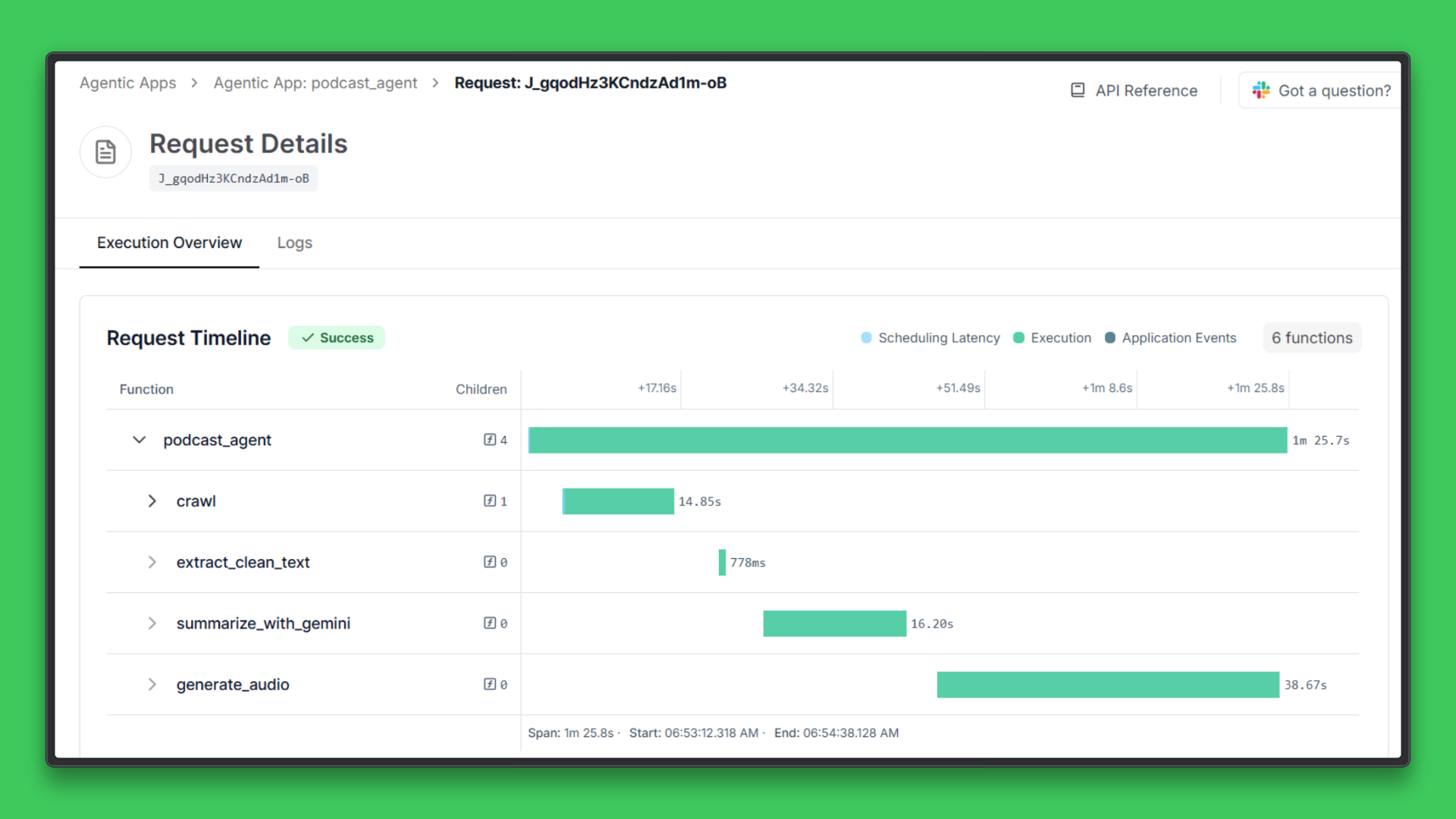

Observe Execution (Graph & Timing)#

After invocation, Tensorlake provides a full execution overview, including:

- Observable function graph

- Execution timing per function

- Clear parent → child function relationships

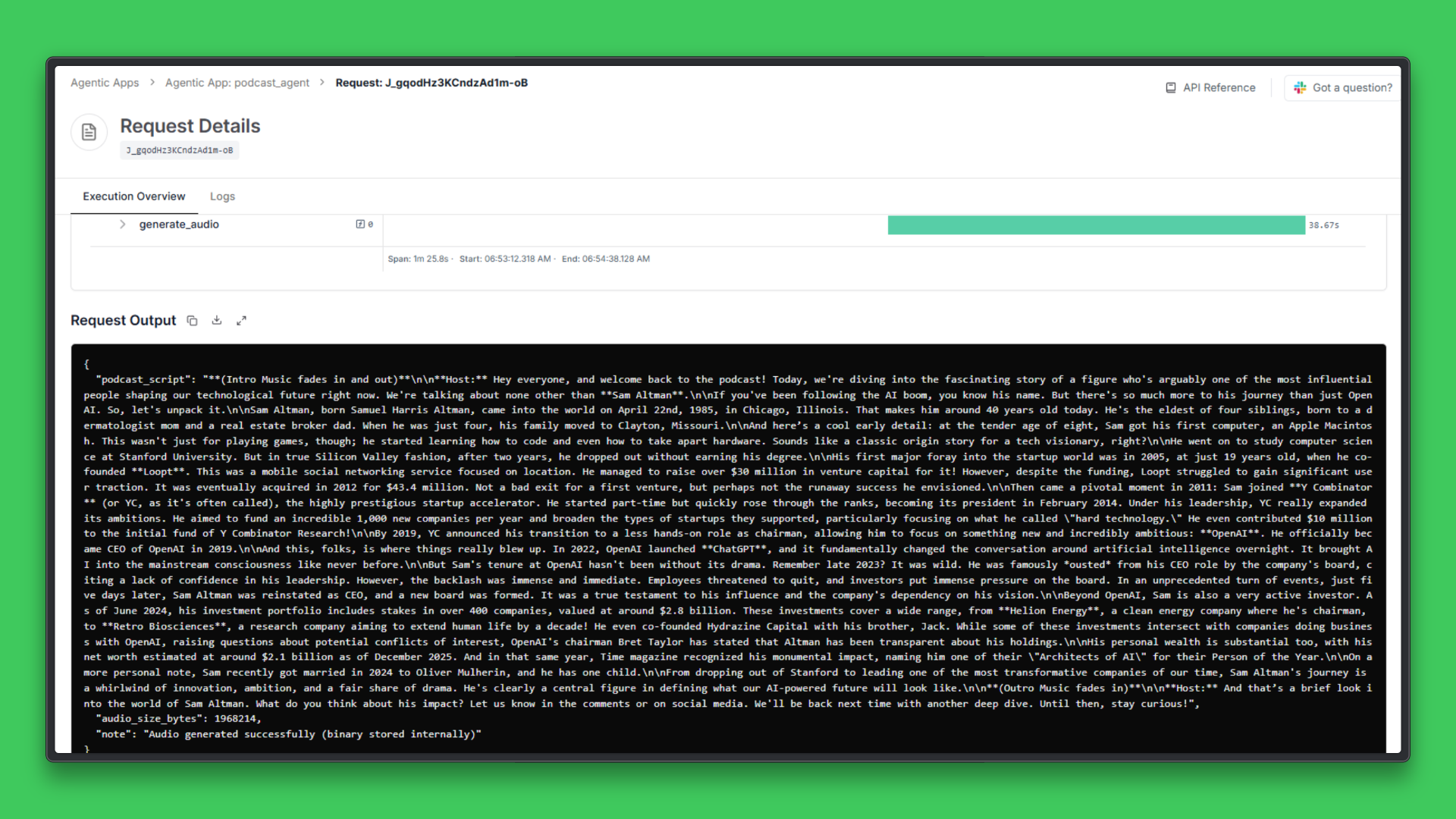

At the end of the workflow, you have:

- A fully crawled and processed website

- A clean podcast-style script generated by Gemini

- A high-quality MP3 narration generated via ElevenLabs

- Full observability into execution timing and function behavior

Key Takeaways#

- This project demonstrates how an end-to-end agent can move from raw web content to a finished podcast using a clear, structured workflow.

- Tensorlake serves as the execution backbone of the system, running the scraper, coordinating intermediate steps, and handling data preparation reliably.

- By separating scraping, summarization, and audio generation into distinct Tensorlake functions, the pipeline remains easy to reason about, debug, and extend.

- External models such as Gemini and ElevenLabs are used only for inference, while Tensorlake manages orchestration, retries, and execution state.

- The same architectural pattern can be applied to other content workflows such as automated briefings, research digests, or narrative summaries.

If you want to build similar execution-heavy AI workflows without managing infrastructure, explore what Tensorlake offers for running tools, preparing data, and scaling agent-style applications.

Start with the Tensorlake Applications Quickstart, experiment with the cookbooks, and see how far you can take this pattern with your own use cases.

Arindam Majumder

Developer Advocate at Tensorlake

I’m a developer advocate, writer, and builder who enjoys breaking down complex tech into simple steps, working demos, and content that developers can act on. My blogs have crossed a million views across platforms, and I create technical tutorials on YouTube focused on AI, agents, and practical workflows. I contribute to open source, explore new AI tooling, and build small apps and prototypes to show developers what’s possible with today’s models.